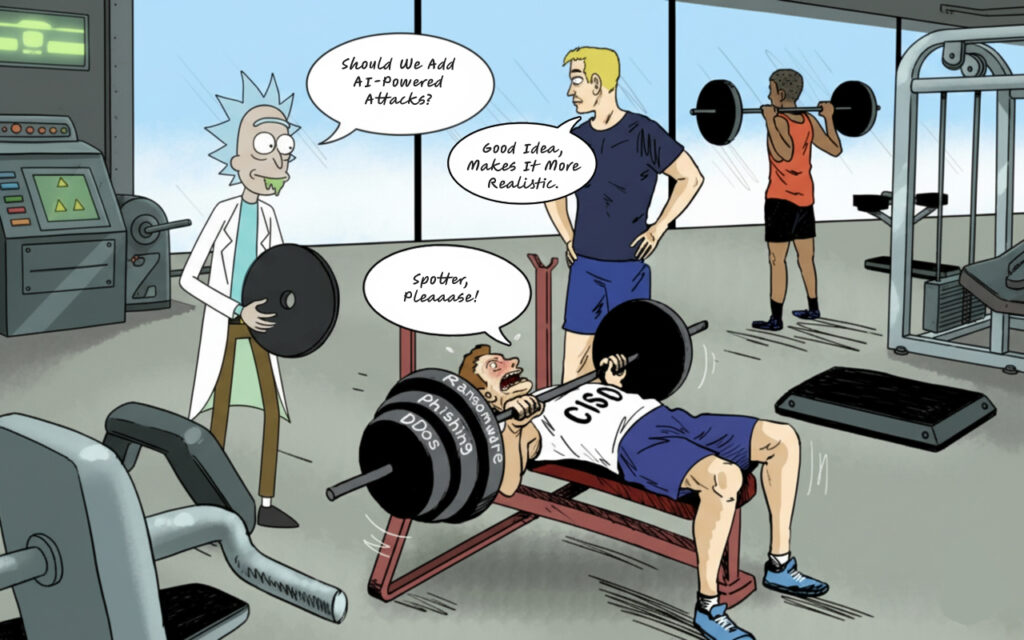

A CISO strains beneath a loaded barbell, the plates etched with Ransomware, Phishing, DDoS, and Zero-Days. Around them, vendors debate not how to help lift the weight, but which new one to add next. One waves a fresh plate labeled AI-powered attacks.

That scene sums up the state of AI in cybersecurity today. Vendors rush to automate what is most visible (detections, alerts, triage) while ignoring the hidden half that truly defines success: context, decision-making, and SOC contextual intelligence.

This article explores AI SOC adoption challenges, how the industry fell into the “Known-Known Trap,” and what it takes to build an AI-SOC that genuinely empowers human analysts instead of overwhelming them.

The Known-Knowns: Where Everyone Competes

Walk into any security conference, and you’ll see the same pitch repeated across dozens of booths: “AI-powered threat detection,” “Autonomous alert triage,” “ML-driven anomaly correlation.” These are the Known-Knowns of AI security automation: predictable, rule-based tasks where the inputs are structured, the outputs measurable, and the value proposition easy to demonstrate.

The majority of AI-SOC products focus on three core capabilities:

- Detection and Ingestion: Collecting logs, normalizing data formats, identifying patterns in telemetry

- Alert Aggregation: Clustering noisy events, deduplicating similar alerts, reducing volume

- Initial Triage: Scoring alerts by severity, enriching with threat intelligence, filtering false positives
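To make these three capabilities concrete, here is a minimal Python sketch of aggregation and triage. It is an illustration under stated assumptions, not any vendor's implementation: the `Alert` fields, the rule names, and the 1 to 5 severity scale are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    rule: str      # detection rule that fired (hypothetical names)
    host: str      # asset the alert refers to
    severity: int  # assumed scale: 1 (low) to 5 (critical)


def aggregate(alerts):
    """Cluster alerts that share the same rule and host."""
    clusters = defaultdict(list)
    for alert in alerts:
        clusters[(alert.rule, alert.host)].append(alert)
    return clusters


def triage(clusters):
    """Return one row per cluster, highest severity first."""
    rows = [
        {
            "rule": rule,
            "host": host,
            "count": len(group),
            "severity": max(a.severity for a in group),
        }
        for (rule, host), group in clusters.items()
    ]
    return sorted(rows, key=lambda row: (-row["severity"], -row["count"]))


alerts = [
    Alert("brute_force_login", "vpn-01", 3),
    Alert("brute_force_login", "vpn-01", 3),
    Alert("brute_force_login", "vpn-01", 4),
    Alert("malware_hash_match", "laptop-17", 5),
]
queue = triage(aggregate(alerts))
```

Four raw alerts collapse into a two-row queue. Note what the output still lacks: nothing in it tells the analyst what to do about either row.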

Vendors and products such as CrowdStrike and Microsoft Sentinel compete primarily in this space. Some vendors boast that AI security automation can process an alert in under two minutes, compared with the 30–40 minutes a human analyst requires, and claim to automate 60–70% of routine SOC tasks, cutting investigation time from hours to minutes.

But automating detection and triage alone doesn’t solve the core AI SOC gaps that cause adoption to fail.

As one security architect put it:

“The brutal truth is that today’s SecOps struggle isn’t a technology problem—it’s a stack integration problem.”

Adding another AI layer that surfaces more alerts doesn’t address the fundamental challenge: analysts still don’t know what to do with the information.

Studies show error rates exceeding 60% when AI-SOC agents handle multi-step investigative workflows, which suggests that AI SOC adoption challenges often start with ignoring context and workflow realities.

The Known-Unknowns—The Forgotten Half of AI Adoption

If detection and triage are the lower half of the AI-SOC stack, the upper half—decision support, workflow integration, and capability building—represents the AI SOC gaps most vendors ignore.

1. Decision Support and SOC Contextual Intelligence

The Gap: AI tells you what happened, but not what to do about it.

Most AI in cybersecurity tools output a risk score or severity label. What analysts actually need is SOC contextual intelligence: an understanding of why an alert matters in their specific environment and what action to take next.

Poorly integrated AI systems often produce overwhelming false positives, “black-box” conclusions, and trust gaps. Without explainability, analysts can’t validate AI conclusions, creating one of the most persistent AI SOC adoption challenges: lack of trust.
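As a rough illustration of the difference between a bare score and decision support, the Python sketch below pairs every recommended action with the reasons behind it. The asset inventory, the thresholds, and the action names are assumptions made up for this example; a real SOC would draw context from its own asset database and response playbooks.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    action: str
    reasons: list = field(default_factory=list)


# Hypothetical asset inventory; in practice this would come from a CMDB or similar.
ASSET_CONTEXT = {
    "db-prod-01": {"criticality": "high", "internet_facing": False},
    "kiosk-12": {"criticality": "low", "internet_facing": True},
}


def recommend(host, severity):
    """Turn a bare severity score into an action with stated reasons."""
    context = ASSET_CONTEXT.get(host)
    reasons = [f"alert severity is {severity}/5"]
    if context is None:
        # Missing context is itself a finding the analyst should see.
        reasons.append(f"{host} is not in the asset inventory")
        return Recommendation("escalate_to_analyst", reasons)
    reasons.append(f"{host} criticality is {context['criticality']}")
    if context["internet_facing"]:
        reasons.append(f"{host} is internet-facing")
    if severity >= 4 and context["criticality"] == "high":
        return Recommendation("isolate_host_and_page_on_call", reasons)
    if severity >= 4 or context["criticality"] == "high":
        return Recommendation("open_investigation", reasons)
    return Recommendation("log_and_monitor", reasons)


high = recommend("db-prod-01", 5)
low = recommend("kiosk-12", 2)
unknown = recommend("printer-03", 3)
```

Because each `Recommendation` carries its `reasons`, an analyst can check the logic instead of taking a score on faith, which is the trust gap described above.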

Gartner predicts that by 2028, 50% of SOCs will deploy AI-based decision support systems, recognizing that the value lies not in faster detection but in clearer, context-driven decision-making.

2. Workflow Integration—Beyond API Bolt-Ons

The Gap: AI runs in parallel to your actual work, not within it.

Traditional SOAR platforms “excel at automating known processes but struggle with dynamic, investigative work.” This contributes directly to AI SOC adoption challenges, as analysts are forced to maintain rigid playbooks that break under real-world complexity.

True integration requires AI in cybersecurity systems to adapt to your environment (SIEM, EDR, cloud security), forming part of an operational ecosystem rather than another silo.

3. Capability Building and Knowledge Capture

The Gap: Senior analysts’ expertise walks out the door because AI doesn’t learn from them.

Without continuous learning loops, AI security automation stagnates. Future-ready SOCs must enable AI to learn from human feedback, capturing tacit knowledge, contextual decision-making, and investigative reasoning.

This not only bridges critical AI SOC gaps but transforms AI from a static tool into an adaptive, learning partner.
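One very simple form of such a learning loop is to record analyst verdicts per detection rule and use them to estimate how often each rule is right. The Python sketch below assumes a hypothetical `FeedbackStore` class and rule names; it captures only verdicts, not the richer investigative reasoning described above.

```python
from collections import defaultdict


class FeedbackStore:
    """Track analyst verdicts per detection rule."""

    def __init__(self):
        # Each rule maps to [true_positives, false_positives].
        self._counts = defaultdict(lambda: [0, 0])

    def record(self, rule, is_true_positive):
        index = 0 if is_true_positive else 1
        self._counts[rule][index] += 1

    def precision(self, rule):
        """Smoothed share of true positives; 0.5 when there is no feedback yet."""
        tp, fp = self._counts[rule]
        return (tp + 1) / (tp + fp + 2)


store = FeedbackStore()
for verdict in (False, False, False, True):
    store.record("impossible_travel", verdict)

noisy = store.precision("impossible_travel")  # (1 + 1) / (4 + 2)
unseen = store.precision("new_rule")          # no feedback yet
```

The add-one smoothing keeps a rule with little feedback near 0.5 rather than swinging to 0 or 1 after a single verdict. A triage step could then down-weight rules that analysts repeatedly mark as false positives.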

Why Vendors Avoid These Layers

If contextual intelligence and workflow integration are so essential, why do most vendors ignore them? Because solving AI SOC adoption challenges in these areas is harder, costlier, and slower to show ROI.

- Business Model Bias: Decision support has intangible ROI—harder to quantify, harder to sell.

- Development Complexity: Human-AI collaboration demands UX design, feedback governance, and training programs.

- Integration Barriers: Every SOC’s stack is unique, which makes universal workflow intelligence much harder to build.

- Market Dynamics: Vendors focus on what’s easy to demo—detection, not decision-making.

The CISO’s Burden—And the Automation Paradox

Each new “AI feature” adds another tool, another dashboard, another layer of noise. Instead of relief, AI SOC adoption challenges multiply.

Analysts face cognitive overload, which erodes their trust in AI conclusions. This is the automation paradox of AI in cybersecurity: automation that increases workload instead of reducing it.

This helps explain why only 10% of analysts would trust an AI agent to act autonomously. Without explainability, AI becomes a liability rather than an asset.

CISOs actually need AI-SOC solutions that:

- Lift with humans, not on top of them

- Explain reasoning to build trust

- Adapt to real workflows

- Learn continuously from analysts’ input

- Scale human judgment, not replace it

Such systems address AI SOC gaps holistically, integrating automation with decision-making and SOC contextual intelligence.

What Future-Ready AI-SOC Actually Looks Like

Escaping the Known-Known Trap requires shifting from automation-first to context-first.

1. Unified Decision Support Layer

A contextual intelligence platform aggregates all signals (endpoints, cloud, identity, operational technology) and provides explainable insights. This directly targets AI SOC adoption challenges like fragmented data and opaque alerts.

2. Adaptive Workflow Integration

AI should instrument real analyst behavior, learning from how investigations unfold across tools. This form of AI security automation enhances rather than replaces human workflows.

3. Continuous Learning and Knowledge Capture

Every investigation feeds AI improvement, closing the most persistent AI SOC gaps—stagnation and knowledge loss.

4. Explainable Outcomes and Trust Building

Transparent reasoning and confidence scoring create accountability and trust—the foundation of sustainable AI in cybersecurity adoption.

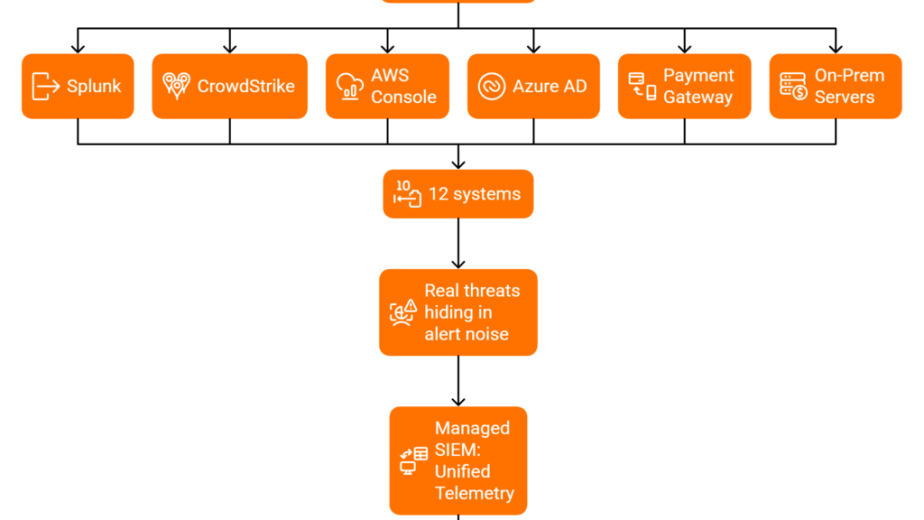

The Architectural Model and What This Requires from Vendors

Emerging best practices define a connected overlay for AI-SOC modernization:

- Foundation Layer: Existing stack (SIEM, EDR, etc.)

- Orchestration Layer: Unified AI data engine

- Intelligence Layer: Decision support + contextual insights

- Human Interface: Explainable outcomes + analyst feedback

This architecture is designed to address AI SOC adoption challenges by aligning automation with human cognition and workflow continuity.
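The four layers can be pictured as a small pipeline. The Python sketch below is a toy illustration only: the function names mirror the layer names, while the event fields, the "more than one signal" heuristic, and the verdict values are assumptions invented for the example.

```python
def foundation_layer():
    """Existing stack: raw events as they might arrive from a SIEM or EDR."""
    return [
        {"source": "edr", "host": "laptop-17", "signal": "malware_hash_match"},
        {"source": "siem", "host": "laptop-17", "signal": "unusual_outbound_traffic"},
        {"source": "siem", "host": "vpn-01", "signal": "failed_login"},
    ]


def orchestration_layer(events):
    """Unified data engine: group signals from all tools by host."""
    by_host = {}
    for event in events:
        by_host.setdefault(event["host"], []).append(event["signal"])
    return by_host


def intelligence_layer(by_host):
    """Decision support: suggest an action and keep the evidence behind it."""
    findings = []
    for host, signals in by_host.items():
        # Toy heuristic: corroborating signals on one host warrant a closer look.
        action = "investigate" if len(signals) > 1 else "monitor"
        findings.append({"host": host, "action": action, "evidence": signals})
    return findings


def human_interface(findings, analyst_verdicts):
    """Explainable outcome plus the analyst's verdict, kept side by side."""
    return [
        {**finding, "analyst_verdict": analyst_verdicts.get(finding["host"], "pending")}
        for finding in findings
    ]


report = human_interface(
    intelligence_layer(orchestration_layer(foundation_layer())),
    analyst_verdicts={"laptop-17": "confirmed"},
)
```

Each layer consumes only the output of the one below it, so the existing stack stays untouched and the analyst's verdict is stored next to the evidence that produced the recommendation.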

Vendors who wish to close the AI SOC gaps must:

- Invest in human-AI collaboration services

- Prioritize explainability over speed

- Build across heterogeneous stacks

- Enable continuous learning and feedback

- Measure trust and adoption, not just accuracy

Conclusion: Beyond Detection Automation

AI-SOC solutions that automate detections but ignore decision-making are destined to fail. AI SOC adoption challenges persist not because algorithms are weak, but because vendors neglect human cognition, organizational workflow, and trust.

The CISO beneath that barbell doesn’t need another plate. They need a spotter who truly helps lift the weight, powered by AI security automation that understands context, trust, and collaboration.

Reflect on your own SOC:

- Does your AI security automation explain why something matters?

- Does it learn from your analysts’ decisions?

- Do your analysts trust it?

If not, your SOC is caught in the Known-Known Trap.

Ready to see how contextual intelligence and workflow integration solve real AI SOC adoption challenges? Learn about UnderDefense’s approach to human-assisted SOC operations that tackle the hard problems, not just the visible ones.


1. What are the main AI SOC adoption challenges organizations face today?

The biggest AI SOC adoption challenges include poor workflow integration, lack of contextual intelligence, limited explainability, and low analyst trust. Many SOCs adopt AI tools that automate detection and triage but fail to support human decision-making or capture institutional knowledge.

2. Why do most AI-SOC vendors focus only on detection and alert automation?

Because it’s easier to demonstrate measurable ROI (faster detections, fewer alerts, and quick time savings). However, this focus creates AI SOC gaps, as vendors often neglect decision support, analyst workflows, and human-AI collaboration, which are crucial for real operational value.

3. How can SOC contextual intelligence improve AI adoption in cybersecurity?

SOC contextual intelligence helps analysts understand why an alert matters, linking technical signals to business impact. By adding context and explainable reasoning, AI becomes a trusted decision-support tool instead of a noisy black box, bridging the biggest AI SOC adoption challenges.

4. What role does explainability play in AI security automation?

Explainability is vital for building trust between analysts and automation systems. Without transparent reasoning, AI security automation creates more work, as analysts must manually verify results. Explainable AI empowers analysts to make faster, more confident, and auditable decisions.

5. How can organizations overcome AI SOC gaps and build a future-ready SOC?

To overcome AI SOC gaps, organizations should prioritize human-centered design: integrate AI into real workflows, enable continuous learning from analyst feedback, and invest in explainable, adaptive systems. The goal is augmented intelligence that enhances human expertise.

The post Why Most AI-SOC Vendors Automate the Wrong Things — and the Real AI SOC Adoption Challenges appeared first on UnderDefense.