You’re comparing three AI security solutions: Vendor A claims 99.9% malware detection, Vendor B automates alert correlation at scale, and Vendor C delivers risk-based decision support across your security posture. Which one truly adds value?

If you chose A or B, you’ve stepped into Gartner’s “feasibility trap” — where vendors optimize for what’s easy to show, not what actually reduces business risk and cost. Our article explains how to move from AI hype to real, measurable cybersecurity outcomes. You’ll learn:

- A simple four-question screening method to identify genuine value.

- A six-point CISO scorecard for comparing vendors.

- Practical guidance on investing where AI truly strengthens security.

The prism of illusion: Why most AI investments miss the mark

The truth is uncomfortable: decision-making is your bottleneck. Despite the “AI-everywhere” narrative, many AI implementations in security deliver unclear and often unmeasured value. Organizations are accumulating AI-powered dashboards at an alarming rate yet still struggle to answer the most important question: “What should I do about this risk right now?”

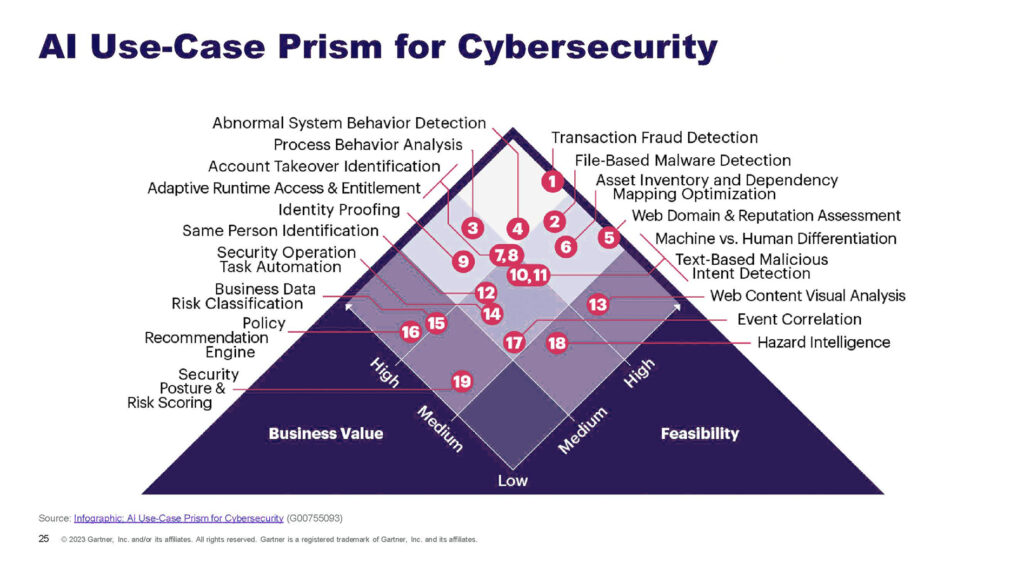

This article reframes how security leaders evaluate and prioritize AI investments. Using Gartner’s AI Use-Case Prism as our lens, we’ll show why many solutions cluster in low-value zones, highlight the high-impact AI use cases in cybersecurity that actually move security metrics, and outline how to build toward genuine AI maturity.

The feasibility trap—Why vendors chase the wrong problems

The comfortable bottom-right

Look at any AI cybersecurity market analysis and you’ll see a pattern: solutions cluster in what Gartner’s Use-Case Prism labels “marginal gains”—high technical feasibility but limited business impact. That’s a predictable outcome of how vendors are incentivized.

The usual suspects:

- File-based malware detection: mature datasets, clear labels (malicious/benign), measurable accuracy rates

- Domain reputation analysis: fast to deploy and easy to demonstrate

- Event correlation at scale: technically impressive and demo-friendly

These features look great in slides and quarterly reports because they’re measurable and relatively simple to build. But they rarely change your security posture in a way that meaningfully reduces business risk.

Why feasibility became the default driver

Market dynamics are straightforward: vendors develop what’s easiest to automate, not what most effectively reduces risk and cost. The result is a proliferation of point solutions that patch narrow technical problems while leaving core security gaps open. Gartner research notes this trend often produces “premium prices for GenAI add-ons” without proportional security benefits—leading to buyer’s remorse when promised value fails to materialize.

Consider alert triage automation. It’s a classic feasibility win: cut daily alerts from 4,000+ to roughly 1,200, a 70% reduction that security teams initially celebrate. Six months later, analysts find the remaining alerts are still low-confidence noise. Mean time to detect hasn’t improved. Breach costs remain unchanged. The problem wasn’t solved; it was repackaged. It shows how AI security automation can be impressive yet still fail to deliver real AI cybersecurity value.

The dashboard delusion

Feasibility-first thinking has spawned the “dashboard delusion”: believing more visibility equals better security. Instead, teams get:

- More threat feeds but less actionable intelligence

- More automated investigations but less clarity on priorities

- More ML models but less trust in their outputs

- More dashboards but less strategic security time

These solutions optimize for demos and feasibility, not for the messy, context-dependent reality of enterprise decision-making—a common pitfall for organizations evaluating AI in SOC capabilities without a clear plan for AI prioritization in cybersecurity.

The real value layer—Where business impact lives

Moving left on the prism: The high-value zone

Shift your focus to the left side of Gartner’s prism—the hard territory vendors avoid because it’s slower to demonstrate and requires deep integration. This is where risk classification, policy recommendation, and posture scoring live. These capabilities tell you which risks matter to your specific business and what to do about them.

Why these AI use cases deliver higher value:

- They map technical events to business impact: “Here’s why this matters to our risk profile.”

- They enable strategic allocation of limited resources: focus remediation where it reduces real risk.

- They improve with organizational learning: the more context they gain, the better their recommendations.

- They reduce decision latency: leaders act faster without waiting for repeated analyst triage.

The integration challenge

Vendors avoid these high-value cases because they’re organizationally complex. Effective risk classification requires:

- Deep integration with your environment: asset criticality, data flows, business processes, and risk tolerance

- Governance alignment: mapping findings to compliance and business policies

- Continuous calibration: learning from decisions and incidents

- Cross-functional collaboration: security, IT, business units, and leadership must contribute context

It’s an iterative, messy, and non-linear exercise, which is precisely why it’s valuable.

From detection to decision support

The core shift is from detection systems (“What happened?”) to decision support systems (“What should we do about it?”). This is the difference between AI that merely scales detection and AI in the SOC that enhances decision-making and delivers real AI cybersecurity value.

Detection-focused AI (high feasibility, lower value):

- Identifies 1,000 potential events

- Flags 200 as “high priority” on technical severity

- Generates a prioritized dashboard

- Leaves analysts to determine actual business risk and response

Decision-support AI (higher value, strategic):

- Identifies 1,000 potential events

- Maps each to asset criticality, exploitability, threat intel, and business context

- Surfaces the 5–10 events that present genuine business risk

- Provides recommended response actions with clear rationale

- Learns from outcomes to improve future prioritization

The latter shifts the conversation from “how many threats did we detect?” to “how much risk did we reduce?”
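To make the contrast concrete, here is a minimal Python sketch of the two pipelines. It assumes each event already carries normalized 0 to 1 scores for technical severity, asset criticality, exploitability, and business context, plus a threat-intel match flag. The `Event` fields, the `RISK_WEIGHTS` values, and the thresholds are hypothetical illustrations, not any vendor’s scoring model; a real deployment would calibrate them against its own incident history.

```python
from dataclasses import dataclass

@dataclass
class Event:
    event_id: str
    technical_severity: float  # 0-1, as reported by the detection tool
    asset_criticality: float   # 0-1, hypothetical asset-inventory rating
    exploitability: float      # 0-1, e.g. derived from vulnerability data
    threat_intel_match: bool   # True if indicators match active threat intel
    business_context: float    # 0-1, e.g. regulated data or revenue-critical system

# Hypothetical weights (they sum to 1.0); calibrate against your own incidents.
RISK_WEIGHTS = {
    "asset_criticality": 0.35,
    "exploitability": 0.25,
    "threat_intel": 0.20,
    "business_context": 0.20,
}

def business_risk(e: Event) -> float:
    """Combine contextual factors into a 0-1 business-risk score."""
    return (
        RISK_WEIGHTS["asset_criticality"] * e.asset_criticality
        + RISK_WEIGHTS["exploitability"] * e.exploitability
        + RISK_WEIGHTS["threat_intel"] * (1.0 if e.threat_intel_match else 0.0)
        + RISK_WEIGHTS["business_context"] * e.business_context
    )

def detection_view(events: list[Event], threshold: float = 0.8) -> list[Event]:
    """Detection-focused: flag by technical severity alone."""
    return [e for e in events if e.technical_severity >= threshold]

def decision_view(events: list[Event], top_n: int = 10,
                  min_risk: float = 0.6) -> list[Event]:
    """Decision support: rank by business risk and surface only the top few."""
    ranked = sorted(events, key=business_risk, reverse=True)
    return [e for e in ranked if business_risk(e) >= min_risk][:top_n]
```

The detection view keeps anything technically severe regardless of which asset it touches; the decision view ranks by business context and caps its output at `top_n`, which is how 1,000 events can shrink to a handful worth acting on.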

The missing middle—Decision support and knowledge loops

The cognitive gap in AI cybersecurity

Most AI today lives in the “known-known” space: pattern recognition where datasets are clean and outcomes measurable. But enterprise security operates in “known-unknowns” and “unknown-unknowns”: novel attacks, context-dependent suspicious behavior, supply-chain surprises, and insider risks. This is where cognitive AI (systems that learn why decisions are made) matters, and where AI use cases in cybersecurity should focus.

The augmentation vs. automation distinction

Research from Gartner emphasizes that effective AI augments human expertise rather than replaces it. This isn’t just safety theater: attackers innovate, and humans still reason about intent, context, and trade-offs. AI in the SOC that properly augments analysts will deliver far greater long-term value than flashy AI security automation that only reduces superficial workload.

Automate:

- Alert triage and enrichment

- Correlation of related events into incident narratives

- Containment for high-confidence threats

- Routine investigation tasks (log collection, timeline reconstruction)

Preserve human judgment for:

- Novel or ambiguous attack patterns

- Unusual privilege escalations lacking clear malicious context

- Suspicious data access that could be legitimate business activity

- Strategic decisions about risk acceptance and policy changes
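One way to encode this split is a routing rule that sends each alert to automated containment, automated enrichment, or a human analyst. The sketch below is a hypothetical policy: the `Alert` fields, the `Route` names, and the 0.95 containment threshold are assumptions chosen for illustration. Strategic decisions about risk acceptance and policy are deliberately absent, since they are not alert-level choices.

```python
from dataclasses import dataclass
from enum import Enum

class Route(Enum):
    AUTO_CONTAIN = "auto_contain"   # known, high-confidence threat
    AUTO_ENRICH = "auto_enrich"     # routine: enrich, correlate, build timeline
    HUMAN_REVIEW = "human_review"   # novel or ambiguous: analyst judgment

@dataclass
class Alert:
    confidence: float            # hypothetical model confidence of malice, 0-1
    matches_known_pattern: bool  # False for novel attack patterns
    privilege_escalation: bool
    sensitive_data_access: bool

def route(alert: Alert, contain_threshold: float = 0.95) -> Route:
    # Novel patterns always go to a human, whatever the model's confidence.
    if not alert.matches_known_pattern:
        return Route.HUMAN_REVIEW
    # Known patterns with high confidence are contained automatically.
    if alert.confidence >= contain_threshold:
        return Route.AUTO_CONTAIN
    # Escalations or data access without clear malicious context may be
    # legitimate business activity, so preserve human judgment.
    if alert.privilege_escalation or alert.sensitive_data_access:
        return Route.HUMAN_REVIEW
    # Everything else gets automated triage and enrichment.
    return Route.AUTO_ENRICH
```

Checking novelty before confidence keeps a highly confident model from auto-containing something it has never seen before.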

The knowledge loop: Learning from decisions

Mature AI systems continuously learn from decisions and outcomes. Most AI is trained once and deployed with occasional updates; it rarely learns from an organization’s actual decision history.

High-value AI should:

- Track decision outcomes and overrides

- Calibrate risk scoring against real incident impact

- Improve recommendations based on what worked and what didn’t

- Adapt to business changes and evolving threats

This feedback loop turns AI from static detection into an adaptive decision-support engine that compounds value over time and proves AI cybersecurity value through results.
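A minimal sketch of such a loop follows, assuming each closed decision records the AI’s predicted risk, whether an analyst overrode it, and a 0 to 1 measure of the real impact observed afterwards. The per-category additive offset, the learning rate, and the choice to weight overrides double are illustrative assumptions rather than an established calibration method; production systems might use proper probability calibration instead.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    category: str           # hypothetical label, e.g. "privilege_escalation"
    predicted_risk: float   # score the AI assigned, 0-1
    analyst_override: bool  # True if an analyst rejected the recommendation
    real_impact: float      # impact observed after the fact, 0-1

@dataclass
class FeedbackLoop:
    learning_rate: float = 0.1
    bias: dict[str, float] = field(default_factory=dict)   # category -> offset
    history: list[Decision] = field(default_factory=list)

    def record(self, d: Decision) -> None:
        """Track the outcome and nudge the category offset toward the error."""
        self.history.append(d)
        error = d.real_impact - d.predicted_risk
        # Assumption: an override is extra evidence the score was off.
        step = self.learning_rate * (2.0 if d.analyst_override else 1.0)
        self.bias[d.category] = self.bias.get(d.category, 0.0) + step * error

    def calibrated(self, category: str, raw_risk: float) -> float:
        """Apply the learned offset to a new raw score, clamped to [0, 1]."""
        return min(1.0, max(0.0, raw_risk + self.bias.get(category, 0.0)))

    def override_rate(self) -> float:
        """Share of recommendations analysts rejected; a rise may signal drift."""
        if not self.history:
            return 0.0
        return sum(d.analyst_override for d in self.history) / len(self.history)
```

For example, if the model scores a privilege escalation at 0.9, an analyst overrides it, and the real impact turns out to be 0.1, future scores in that category are pulled down by 0.16.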

The CISO compass—Navigating AI investment decisions

Treat AI purchases as risk-reduction projects, not feature shopping. Ask up front: “What actually moves the needle on risk and cost?” To turn that principle into action, follow a simple, repeatable flow: screen capabilities, score vendors, mind critical distinctions, and commit organizationally.

To decide whether a capability is worth piloting, answer these four questions:

- Business value: Will it cut breach costs, free analyst hours, improve compliance, or shorten dwell time?

- Feasibility: Do we have the data, integrations, talent, and workflow readiness?

- Success metrics: What are the baselines and audit checkpoints at 30/90/180 days?

- Human role: Which decisions stay human, how can analysts override AI, and how transparent are recommendations?

If a capability can’t be tied to value, feasibility, metrics, and clear human controls, deprioritize it.

Once a capability passes screening, evaluate vendors with this weighted checklist to compare real-world fit and AI cybersecurity value:

- Risk reduction: 20%

- Proof of efficacy: 20%

- Tool consolidation: 15%

- Compliance readiness: 15%

- Vendor stability: 15%

- Post-sale partnership: 15%

Use these weights to move the discussion from demos to measurable outcomes.
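The screen-then-score flow can be sketched in a few lines of Python. The four booleans mirror the screening questions, and `SCORECARD_WEIGHTS` copies the scorecard percentages above; the 1 to 5 rating scale and the function names are assumptions for illustration.

```python
from dataclasses import dataclass

# The six-point CISO scorecard weights from the text (they sum to 1.0).
SCORECARD_WEIGHTS = {
    "risk_reduction": 0.20,
    "proof_of_efficacy": 0.20,
    "tool_consolidation": 0.15,
    "compliance_readiness": 0.15,
    "vendor_stability": 0.15,
    "post_sale_partnership": 0.15,
}

@dataclass
class Screening:
    business_value: bool   # cuts breach cost, analyst hours, or dwell time?
    feasibility: bool      # data, integrations, talent, and workflow ready?
    success_metrics: bool  # baselines and 30/90/180-day checkpoints defined?
    human_role: bool       # override path and transparent recommendations?

    def passes(self) -> bool:
        # Failing any one question means the capability is deprioritized.
        return all((self.business_value, self.feasibility,
                    self.success_metrics, self.human_role))

def weighted_score(ratings: dict[str, float]) -> float:
    """Weighted average of per-criterion ratings on a hypothetical 1-5 scale."""
    missing = SCORECARD_WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return sum(w * ratings[k] for k, w in SCORECARD_WEIGHTS.items())

def rank_vendors(screen: Screening,
                 vendors: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Screen first; only a passing capability gets its vendors scored."""
    if not screen.passes():
        return []
    scored = [(name, weighted_score(r)) for name, r in vendors.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Keeping the screen as a hard gate, rather than folding it into the weighted score, reflects the rule that a capability failing any question is deprioritized no matter how well its vendors score.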

When scoring vendors, keep two distinctions front and center:

- Detection scale vs. decision enhancement: Scaling detection increases coverage; decision-enhancing AI ties signals to context and prescribes actions — that’s where practical ROI comes from.

- Automation theater vs. decision support: Avoid flashy, opaque demos. Favor solutions that offer explainability, human oversight, and continuous learning.

Even the best tech fails without organizational change. Commit to:

- Metrics discipline — stop counting alerts; measure MTTD, MTTR, false positives, incidents prevented, and cost per incident avoided.

- Transparency & validation — require comparable deployments, post-implementation audits, and governance aligned with AI TRiSM principles.

- Design for human excellence — use AI to remove drudgery so analysts focus on threat hunting, detection engineering, and strategy.
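For metrics discipline, the core measures are simple to compute once incidents carry consistent timestamps. This sketch assumes each incident records when malicious activity started, when it was detected, and when it was resolved. MTTR is measured from detection here, although some teams measure from onset, and the `cost_per_incident_avoided` formula (program cost divided by incidents prevented) is one hypothetical definition rather than a standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean

@dataclass
class Incident:
    started: datetime   # earliest evidence of malicious activity
    detected: datetime  # when the SOC detected it
    resolved: datetime  # when it was contained and remediated

def mttd(incidents: list[Incident]) -> timedelta:
    """Mean time to detect: onset to detection."""
    secs = mean((i.detected - i.started).total_seconds() for i in incidents)
    return timedelta(seconds=secs)

def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to respond, measured here from detection to resolution."""
    secs = mean((i.resolved - i.detected).total_seconds() for i in incidents)
    return timedelta(seconds=secs)

def false_positive_rate(closed_as_benign: int, total_alerts: int) -> float:
    """Share of alerts that turned out to be benign."""
    return closed_as_benign / total_alerts if total_alerts else 0.0

def cost_per_incident_avoided(program_cost: float, incidents_prevented: int) -> float:
    """Hypothetical definition: total program cost / incidents prevented."""
    if not incidents_prevented:
        return float("inf")
    return program_cost / incidents_prevented
```

Tracking these at the 30/90/180-day checkpoints, against a pre-deployment baseline, shows whether AI changed outcomes rather than just alert counts.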

Taken together, this flow—screen, score, distinguish, commit—creates a practical compass for AI prioritization in cybersecurity: investments that are measurable, implementable, human-centered, and vendor-backed will reduce risk and deliver real security value.

Conclusion: From detection to decision—The real ROI of AI

The AI revolution in cybersecurity is real, but its value isn’t in detection accuracy, automation percentages, or prettier dashboards. Real value comes from enabling human expertise to operate at scale, with precision and speed.

Shift from feasibility-driven AI to value-driven AI by:

- Identifying high-value AI use cases in cybersecurity that deliver measurable outcomes

- Avoiding the feasibility trap and automation theater

- Building toward AI maturity through phased implementation

- Measuring success with business metrics that matter to boards and CFOs

Ready to move beyond detection theater and build genuine AI value? Explore the UnderDefense MAXI platform to see how contextual intelligence, risk-based prioritization, and human-centered automation can transform your security operations, or download our AI SOC Promise vs. Reality Practical Guide to evaluate current and prospective AI investments.

1. What is the “feasibility trap” and why does it matter?

The feasibility trap is when vendors optimize for easy-to-demonstrate metrics (detection rates, flashy automation) instead of real business impact—it leads to purchases that increase noise without reducing breach risk or costs.

2. How do I know an AI capability will deliver measurable value?

Require a business outcome (e.g., reduced breach cost, fewer analyst hours, lower dwell time), baseline metrics, and a post-implementation audit plan (30/90/180 days) before buying or piloting.

3. When should I prioritize detection-scaling vs. decision-enhancing AI?

Scale detection if you’re missing signal volume; prioritize decision-enhancing AI when your challenge is turning signals into context, recommended actions, and measurable outcomes.

4. How can I spot “automation theater” during vendor demos?

Watch for opaque models, no clear success metrics, inability to explain recommendations, and focus on volume of alerts rather than outcomes—demand explainability, test data from comparable customers, and a rollback/override plan.

5. What organizational changes are required to get real AI value?

Adopt metrics discipline (MTTD, MTTR, incidents prevented, cost per incident), enforce transparency and validation (governance, AI TRiSM principles, audits), and redesign analyst workflows so AI removes drudgery and enhances human decision-making.

The post Beyond Detection: How to Prioritize Real AI Value in Cybersecurity appeared first on UnderDefense.